Portfolio Rebuild

AI‑Accelerated “Vibe Coding” delivered architectural stability and minimized design‑to‑dev latency.

Over six months, I rebuilt my product design practice around AI, not as a gimmick but as a system. I used Google AI Studio to vibe-code my portfolio from aesthetic intent, and VS Code + Copilot to harden that code for production. I used Figma Make to create a complete application prototype, and I also generated specs and markdown requirements. By treating prompts as requirements and contracts instead of clever one-liners, I created a repeatable workflow that turned design ideas into working, shippable interfaces.

The result is a portfolio and process that can move up to 3× faster without sacrificing quality. A prompt-created design system of tokens and components keeps visual consistency near 97%, while lightweight admin tools make publishing and content operations self-service. This case study shows how AI, paired with senior UX oversight, markdown guardrails, and design systems, can deliver enterprise-grade results at startup speed.
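Here is a minimal TypeScript sketch of how a token set can drive both light and dark modes from a single source, so components reference semantic CSS custom properties instead of hard-coded values. The token names, values, and the `data-theme` attribute are hypothetical; they are not taken from the portfolio's actual design system.

```typescript
// Hypothetical semantic tokens: each one carries a light and a dark value.
type Mode = "light" | "dark";

interface Token {
  light: string;
  dark: string;
}

const tokens: Record<string, Token> = {
  "color-surface": { light: "#ffffff", dark: "#0f172a" },
  "color-text": { light: "#111827", dark: "#f9fafb" },
  "color-accent": { light: "#2563eb", dark: "#60a5fa" },
};

// Emit one CSS rule per mode, defining every token as a custom property.
// Assumption: dark mode is switched by a data-theme="dark" attribute.
function toCss(tokenSet: Record<string, Token>): string {
  const block = (selector: string, mode: Mode): string => {
    const lines = Object.entries(tokenSet).map(
      ([name, value]) => `  --${name}: ${value[mode]};`,
    );
    return `${selector} {\n${lines.join("\n")}\n}`;
  };
  return [block(":root", "light"), block('[data-theme="dark"]', "dark")].join("\n\n");
}

console.log(toCss(tokens));
```

Components would then use declarations like `color: var(--color-text)` and never redefine colors per mode, which is one way to limit the conflicts between global styles and overrides that light/dark switching tends to expose.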

Engineering high‑fidelity, hallucination‑resistant workflows to unlock 3× design velocity.


Concept‑to‑prototype in 13 days (up to ~70% faster than the 4–6 week baseline).
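Assuming the 13 days and the 4–6 week baseline (28–42 days) are both counted in calendar days, the time saved and the equivalent speed-up work out as:

$$
1 - \frac{13}{42} \approx 0.69, \qquad 1 - \frac{13}{28} \approx 0.54
$$

$$
\frac{42}{13} \approx 3.2\times, \qquad \frac{28}{13} \approx 2.2\times
$$

So the reduction is roughly 54–69% depending on which end of the baseline you compare against, and only against the 6-week end does the speed-up pass 3×.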

Repeatable Human‑in‑the‑Loop (HITL) QA steps ensure code and content precision at speed.

This chart visualizes the reduction in time required to deliver a demo‑ready prototype using the new workflow.

My portfolio build was a great way to really learn the tech and the tools. I can't remember a time when I was more excited about my job. We are not at the “designer as full‑stack visionary” stage yet, but we are getting there.

This website is not a template; it was vibe‑coded from scratch in Google AI Studio using a long, high‑token conversation history plus markdown requirements. The LLM acted as a dedicated engineering partner, translating abstract aesthetic requests and requirements into working code that I later hardened for production.

Architectural Maturity: Post‑generation refinement in VS Code + Copilot corrected structural fragility, and Cursor AI helped with refactoring and bug fixes.

Custom-Built Tools: To make post‑launch publishing seamless, I one-shotted (generated from a single prompt) a standalone tool for writing and publishing blog posts.

From concept to demo‑ready prototype in 13 days, showcasing exceptional project velocity.

Generated complex, conditional flows and state transitions at unprecedented speed.

Because it started from a Figma artboard and Preline Figma, the prototype stayed true to the design system.

Polish suitable for executive review + immediate user testing.

Creating BrowserFence in Figma Make bypassed traditional bottlenecks, proving high velocity and high fidelity aren’t mutually exclusive.

This rapid turnaround enabled earlier stakeholder feedback, more iteration cycles, and a validated, demo‑ready result in record time.

WAIT A MINUTE...

What should have been simple tweaks, such as line spacing, button colors, and tags/badges, often spiraled into hours of back-and-forth trial and error. Every “quick fix” exposed hidden conflicts between global styles, overrides, and the quirks of features like switchable light/dark modes. I’d fix one thing, and something else would break. At times, AI-generated code felt more like a blunt instrument than a scalpel: dropping in hacks, duplicating rules, or stripping away the very styles I had added manually to make the site cohesive. Instead of accelerating me, it often slowed me down, forcing me to dig deeper into the mess just to restore what I already had in the previous version. There must be a better way...

The key to using AI effectively is developing systematic workflows that balance AI speed with human precision. Daily use of modern LLMs revealed their limits: misattributed quotes, code hallucinations, and faulty advice. The most important outcome was a set of precision protocols for deploying AI at scale while maintaining quality.

Prevents hallucination

Ensures brand alignment

Validates code integrity

Precision Protocols enable scale without sacrificing trust. They make AI contributions measurable, reviewable, and improvable, which turns speed into sustainable velocity.

In practice, these gates cut rework while raising the floor on quality, especially for complex UX work that touches multiple surfaces.
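The case study doesn't spell out what each gate checks, so the following TypeScript is only a hypothetical sketch of how the three gates could be partly automated as pre-checks that feed a human reviewer. The package allowlist, regular expressions, and messages are illustrative assumptions, and the checks are deliberately naive.

```typescript
// Hypothetical HITL "precision gates": each gate inspects AI-generated output
// and returns findings for a human reviewer. No gate approves anything on its own.
interface Finding {
  gate: string;
  message: string;
}

interface Artifact {
  code: string; // generated TypeScript/JavaScript source
  css: string; // generated component stylesheet (not the token definitions)
}

type Gate = (artifact: Artifact) => Finding[];

// Assumption: the packages the project really depends on.
const KNOWN_PACKAGES = new Set(["react", "react-dom"]);

// "@scope/pkg/sub" -> "@scope/pkg", "pkg/sub" -> "pkg"
function packageName(specifier: string): string {
  const parts = specifier.split("/");
  return specifier.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
}

// Hallucination gate: flag bare-specifier imports of packages we don't use.
const hallucinationGate: Gate = ({ code }) => {
  const findings: Finding[] = [];
  const importRe = /from\s+["']([^"'./][^"']*)["']/g;
  let match: RegExpExecArray | null;
  while ((match = importRe.exec(code)) !== null) {
    const pkg = packageName(match[1]);
    if (!KNOWN_PACKAGES.has(pkg)) {
      findings.push({ gate: "hallucination", message: `unknown package "${pkg}"` });
    }
  }
  return findings;
};

// Brand gate: component CSS should use var(--token), not raw hex colors.
// Naive: an ID selector such as #fab would also be flagged.
const brandGate: Gate = ({ css }) => {
  const hexes = css.match(/#[0-9a-fA-F]{3,8}\b/g) || [];
  return hexes.map((hex) => ({ gate: "brand", message: `raw color ${hex}; use a token` }));
};

// Integrity gate: flag !important hacks and selectors defined more than once.
const integrityGate: Gate = ({ css }) => {
  const findings: Finding[] = [];
  const importantCount = css.split("!important").length - 1;
  if (importantCount > 0) {
    findings.push({ gate: "integrity", message: `${importantCount} !important declaration(s)` });
  }
  const seen = new Set<string>();
  const selectorRe = /([^{}]+)\{/g;
  let match: RegExpExecArray | null;
  while ((match = selectorRe.exec(css)) !== null) {
    const selector = match[1].trim();
    if (seen.has(selector)) {
      findings.push({ gate: "integrity", message: `duplicate rule for "${selector}"` });
    }
    seen.add(selector);
  }
  return findings;
};

// Run every gate and collect all findings for the human review step.
function runGates(artifact: Artifact, gates: Gate[]): Finding[] {
  return gates.reduce<Finding[]>((all, gate) => all.concat(gate(artifact)), []);
}

const report = runGates(
  {
    code: 'import React from "react";\nimport { magic } from "ai-ui-helpers";',
    css: ".btn { color: #ff0000 !important; }\n.btn { color: var(--color-accent); }",
  },
  [hallucinationGate, brandGate, integrityGate],
);
report.forEach((f) => console.log(`[${f.gate}] ${f.message}`));
```

On the sample input, this flags the unknown `ai-ui-helpers` package, the raw `#ff0000`, the `!important`, and the duplicate `.btn` rule. Returning a list of findings rather than a pass/fail verdict keeps the final call with the human in the loop.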

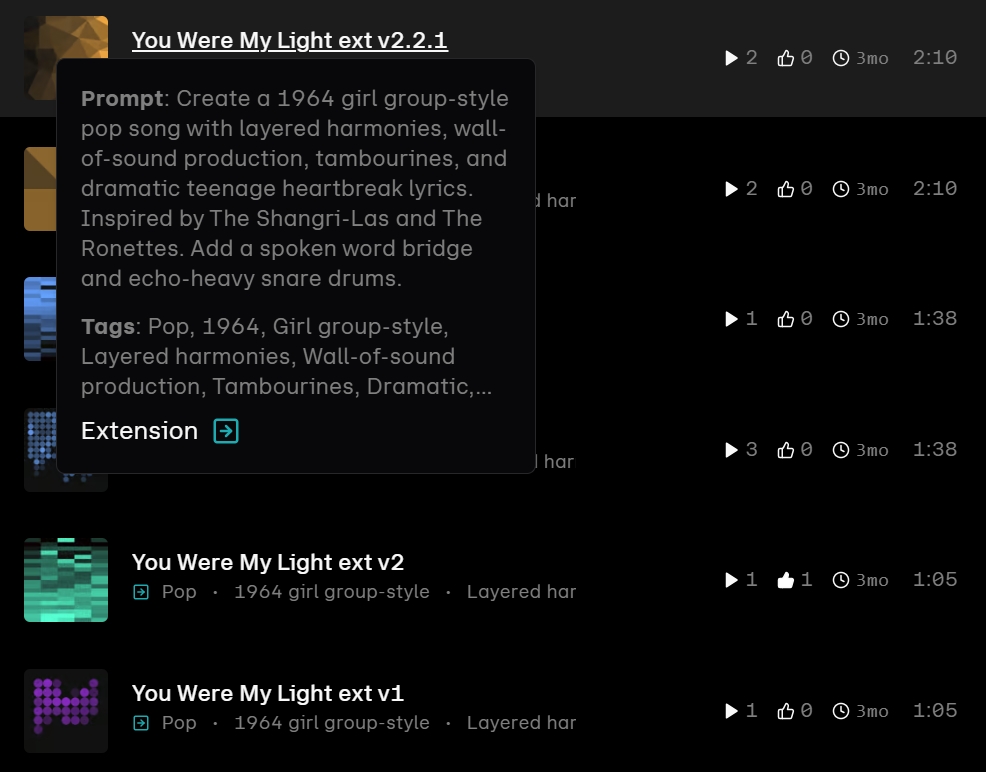

Not everything in this six-month AI journey was about workflows, velocity charts, and design systems. I also went down a rabbit hole trying to make AI music sound like it rolled straight out of 1964. Using Udio, I crafted prompts to channel the girl-group magic of The Shangri-Las and The Ronettes, complete with layered harmonies, wall-of-sound production, tambourines, echo-heavy snares, and even a spoken-word bridge. The result was The Phonettes, an imaginary 60s pop group that never existed but could have. It was equal parts nostalgia experiment and technical challenge, proving that with the right direction, AI can evoke something surprisingly human, imperfect, and alive. Isn't that what design is really all about?

This six-month AI upskilling journey proved that velocity and quality don’t have to compete. The designer’s role is no longer defined by execution but by curation, taste, and strategic guidance: knowing which prompts to craft, which outputs to keep, and what feels right, relevant, and human.

AI can generate endlessly, but it’s our ability to curate, refine, and translate vision into value that makes the work resonate with people and drive business forward.